
September 17, 2025

Category: Physical AI


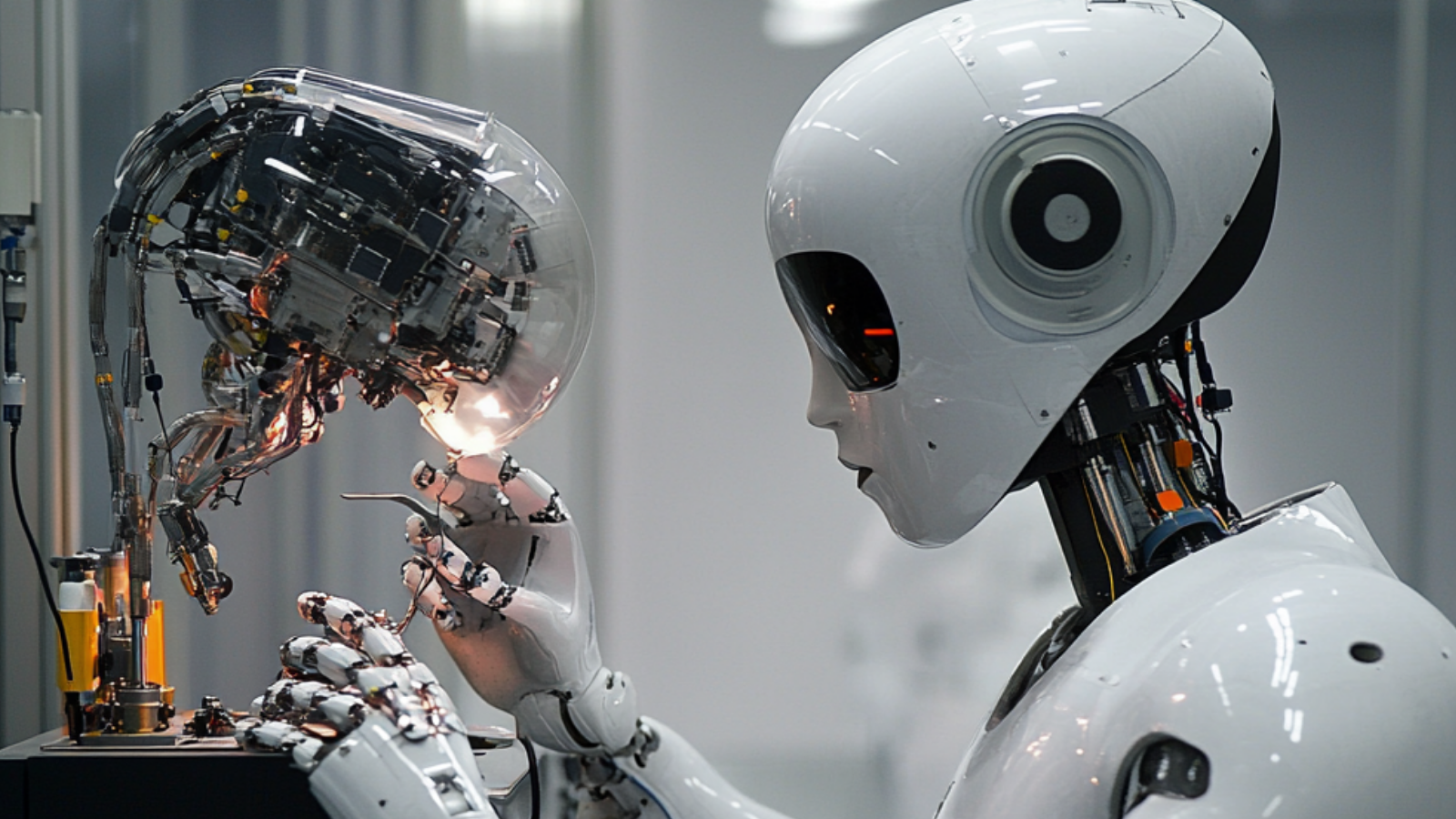

Vision–language–audio (VLA) models aim to endow humanoid robots with human-like perceptual capabilities. Yet audio is often sidelined in favor of vision and language. This paper explores the potential role of audio as a complementary modality, drawing on lessons from autonomous vehicles where microphone arrays detect non-line-of-sight hazards such as emergency sirens.

We argue that while audio is not a silver bullet, it can fill perceptual gaps and provide robustness in occlusion-heavy environments. We examine both the opportunities and challenges of crowdsourced audio data collection through decentralized platforms like Silencio, and propose a tiered sound corpus for embodied AI. Our goal is not to present audio as a standalone driver of humanoid robotics adoption, but as a practical addition to multimodal systems that, if developed thoughtfully, may enhance safety, intuition, and adaptability.

Introduction

Large multimodal models excel at interpreting images, processing spoken commands, and reasoning in natural language. However, their ability to perceive non-verbal environmental sounds remains underdeveloped. Humans rely on audio for subtle but important tasks: detecting approaching footsteps, inferring intent from vocal tone, or distinguishing a quiet office from a bustling café.

For humanoid robots, neglecting audio risks perceptual blind spots that vision and language alone cannot resolve. That said, the relative importance of audio differs across domains. In household and service environments, it may improve safety and user interaction. In industrial or logistics contexts, it could help detect hazards or locate the source of spoken instructions.

Lessons from autonomous vehicles

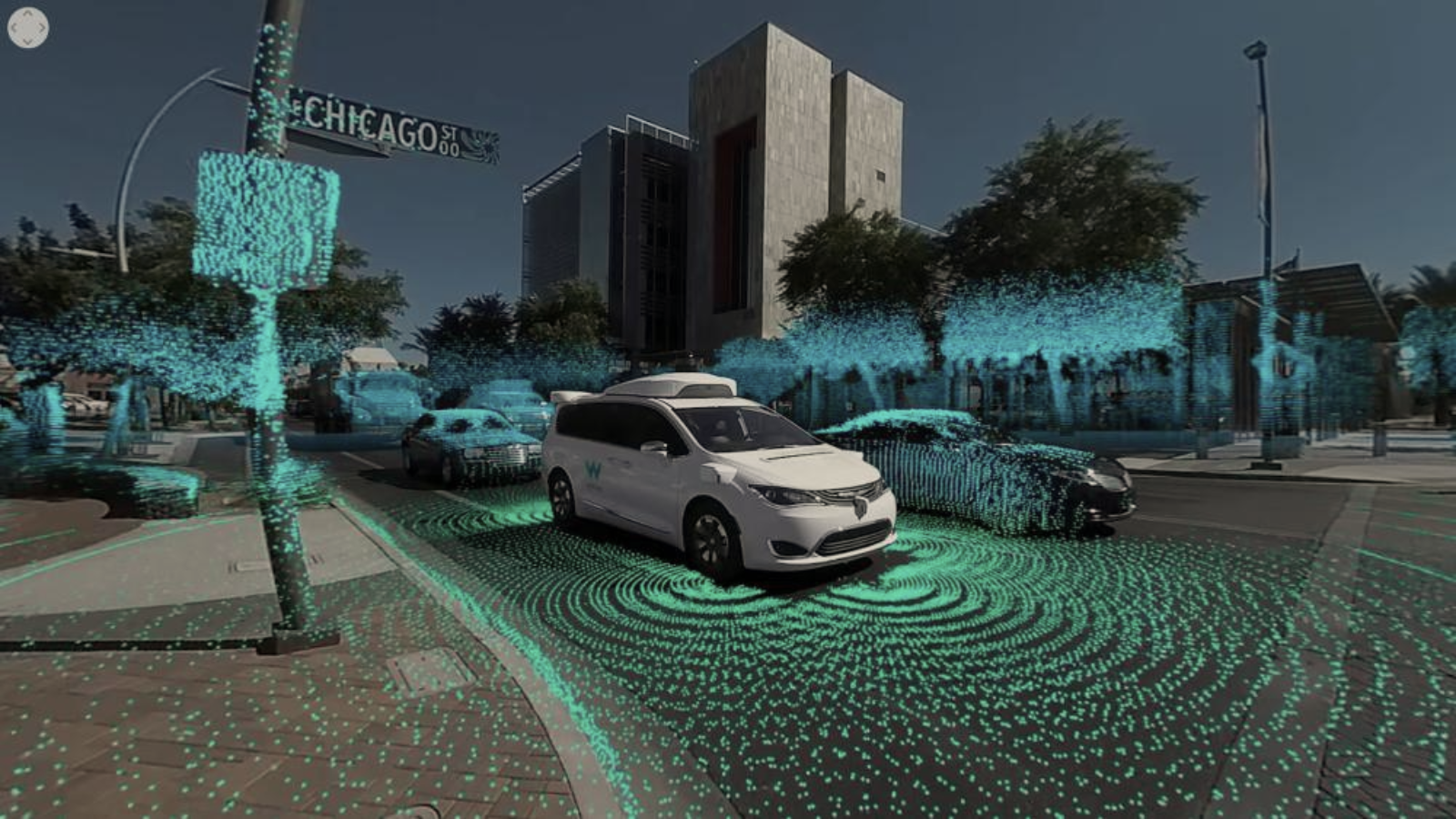

Autonomous vehicles demonstrated that vision and LIDAR alone could miss occluded events, such as emergency vehicles. Microphones added a 360-degree acoustic “field of view.” For example, Waymo’s 2017 tests in Chandler, Arizona, built a sound library enabling vehicles to detect sirens from beyond visual range. These systems required not just recognition but also fast localization with sub-50 ms latency.

XMAQUINA’s DEUS Labs sees value in harnessing decentralized, scalable audio data collection. Silencio, for example, uses smartphones as a decentralized physical infrastructure network (DePIN) to crowdsource ambient and object-related sounds. Collaborating through networks like this on open, comprehensive audio datasets and robust models could give robots human-like acoustic awareness.

This paper outlines how these efforts, combined with lessons from automotive audio systems, can support the growing role of audio in embodied AI.

Why humanoid robots need ears

Humanoid robots already use microphones for speech-to-text processing, but their understanding of non-verbal acoustic signals, such as alarms, environmental cues, or mechanical sounds, remains underdeveloped.

Robust audio perception is important for three reasons:

- Non-line-of-sight sensing: Sounds such as sirens, breaking glass, or a dropped tool can indicate hazards or events beyond a robot’s visual field, allowing for proactive intervention. On the more everyday side, audio can enable responsiveness when a humanoid’s owner calls for assistance from another room or even a different floor—similar to how a whistle draws a dog’s attention.

- Disambiguation of language: Instructions like “follow the beeping forklift” link spoken words to acoustic cues, grounding language in the environment.

- Self-supervised learning signal: Audio-visual correspondence provides millions of free training labels, as co-occurring frame–waveform pairs form natural contrastive examples.
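The third point can be made concrete with a contrastive objective. The sketch below is a minimal, pure-Python version of a symmetric InfoNCE-style loss, one common way (assumed here, not taken from any specific system) to turn co-occurring audio and video embeddings into a training signal: each matching pair on the diagonal should score higher than every mismatched pair in the batch. The function names, toy embeddings, and temperature of 0.1 are illustrative assumptions.

```python
import math


def _unit(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _mean_cross_entropy(logits):
    """Mean cross-entropy where row i's correct class is column i (the matching pair)."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def info_nce(audio, video, temperature=0.1):
    """Symmetric contrastive loss over a batch of paired audio/video embeddings."""
    a = [_unit(x) for x in audio]
    v = [_unit(x) for x in video]
    logits = [[sum(p * q for p, q in zip(ai, vj)) / temperature for vj in v] for ai in a]
    audio_to_video = _mean_cross_entropy(logits)
    video_to_audio = _mean_cross_entropy([list(col) for col in zip(*logits)])
    return 0.5 * (audio_to_video + video_to_audio)


audio = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(info_nce(audio, audio))                   # matched pairs: loss close to zero
print(info_nce(audio, audio[1:] + audio[:1]))   # every pair mismatched: loss is large
```

No labels are needed: the pairing itself, which frame co-occurred with which waveform, supplies the supervision. A real system would compute this on large batches of learned encoder outputs.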

Still, it is important to note that not all applications require these capabilities, and in some cases visual sensors, radar, or tactile feedback may provide more reliable inputs than microphones. Yet, if the goal is to bring humanoids closer to human-level perception of their environment, then developing robust ways to interpret and reason about the audio channel becomes indispensable.

Self‑Driving Cars Paved The Way

Early autonomous vehicle prototypes from Waymo and others revealed that vision and LIDAR alone could miss emergency vehicles occluded by traffic or buildings. Microphones provided a 360-degree acoustic “field of view.” For example, Waymo’s 2017 tests in Chandler, Arizona, created a sound library enabling minivans to detect sirens from twice the visual range and estimate their direction for yielding decisions (waymo.com). This required not just recognition but localization with under 50 ms latency.
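A sub-50 ms target limits how much audio a detector may buffer before deciding. A rough budget, assuming non-overlapping analysis frames and ignoring transport and actuation delays, is buffering time plus inference time. The helpers below are a hypothetical back-of-the-envelope check, not a description of Waymo's pipeline.

```python
def detection_latency_ms(frame_samples, sample_rate_hz, compute_ms, frames_needed=1):
    """Rough worst-case latency: time to fill the analysis frames plus inference time.

    Assumes non-overlapping frames; ignores I/O, transport, and actuation delays.
    """
    buffering_ms = 1000.0 * frame_samples * frames_needed / sample_rate_hz
    return buffering_ms + compute_ms


def fits_budget(frame_samples, sample_rate_hz, compute_ms, budget_ms=50.0, frames_needed=1):
    """True if the rough latency estimate stays within the budget (default 50 ms)."""
    return detection_latency_ms(frame_samples, sample_rate_hz, compute_ms, frames_needed) <= budget_ms


# A 1024-sample frame at 48 kHz buffers about 21.3 ms of audio before inference starts.
print(round(detection_latency_ms(1024, 48_000, compute_ms=10.0), 1))  # prints 31.3
```

Requiring two 2048-sample frames before a decision would already overshoot 50 ms on buffering alone, which is one reason streaming detectors favor short frames.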

Hardware Lessons

Autonomous-vehicle microphones must withstand harsh conditions such as rain, dust, road salt, and pressure-washer blasts. Bosch’s sealed MEMS capsules, mounted behind body panels, use onboard neural filters to isolate sirens from wind or engine noise (bosch.com). Renesas demonstrates that distributed sub-arrays at roof corners and bumpers enable precise triangulation at low cost (renesas.com).
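Triangulation with distributed sub-arrays reduces, in its simplest form, to intersecting bearing rays. Assuming each sub-array reports a 2D bearing (radians, counterclockwise from the +x axis) and its mounting position is known, a minimal sketch follows. The function name and coordinate convention are illustrative; real systems fuse noisy bearings from more than two arrays, for example with least squares.

```python
import math


def triangulate(p1, bearing1, p2, bearing2, eps=1e-9):
    """Intersect two bearing rays from sub-arrays at p1 and p2.

    Returns (x, y), or None if the rays are (nearly) parallel or would meet
    behind one of the arrays.
    """
    d1 = (math.cos(bearing1), math.sin(bearing1))
    d2 = (math.cos(bearing2), math.sin(bearing2))
    # Solve p1 + t1*d1 = p2 + t2*d2, i.e. t1*d1 - t2*d2 = p2 - p1, by Cramer's rule.
    det = d1[0] * (-d2[1]) - (-d2[0]) * d1[1]
    if abs(det) < eps:
        return None
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (rx * (-d2[1]) - (-d2[0]) * ry) / det
    t2 = (d1[0] * ry - rx * d1[1]) / det
    if t1 < 0 or t2 < 0:
        return None  # intersection lies behind one of the arrays
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])


# Two sub-arrays 2 m apart (e.g. bumper corners) both hear a source located at (1, 1).
print(triangulate((0.0, 0.0), math.radians(45), (2.0, 0.0), math.radians(135)))
```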

Algorithms and Benchmarks

Academic research supports these industry findings. A 2021 study achieved 99% recall and a localization error below 10 m for sirens using two low-cost microphones (arxiv.org). A 2025 MDPI Sensors paper extended this with adaptive beamforming and transformer-based classifiers optimized for highway speeds (mdpi.com). Open datasets such as UrbanSound8K-AV (US8K-AV) provide annotated street-level audio for training embedded models (pmc.ncbi.nlm.nih.gov).
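With two microphones, direction can be estimated from the time difference of arrival (TDOA): find the lag that maximizes the cross-correlation between the channels, convert it to seconds, and map it to an angle with sin(θ) = c·τ / d for microphone spacing d. The sketch below uses plain time-domain cross-correlation for clarity; it is not the method of the cited studies, and GCC-PHAT or learned localizers are typically more robust to reverberation. The 0.2 m spacing, sample rate, and function names are assumptions.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, dry air at roughly 20 °C


def estimate_lag(a, b, max_lag):
    """Lag (in samples) by which channel b trails channel a, via brute-force cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    n = min(len(a), len(b))
    for lag in range(-max_lag, max_lag + 1):
        # Only sum over indices where both a[i] and b[i + lag] exist.
        score = sum(a[i] * b[i + lag] for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def bearing_deg(lag, sample_rate_hz, spacing_m, c=SPEED_OF_SOUND):
    """Angle from broadside; positive means the source is on microphone A's side."""
    x = c * (lag / sample_rate_hz) / spacing_m
    x = max(-1.0, min(1.0, x))  # clamp: noise can push |x| slightly above 1
    return math.degrees(math.asin(x))


fs, spacing = 48_000, 0.2
max_lag = math.ceil(spacing / SPEED_OF_SOUND * fs)  # search only physically possible lags
rng = random.Random(0)
mic_a = [rng.uniform(-1.0, 1.0) for _ in range(2000)]
delay = 10
mic_b = [0.0] * delay + mic_a[:-delay]  # mic B hears the same noise 10 samples later
lag = estimate_lag(mic_a, mic_b, max_lag)
print(lag, round(bearing_deg(lag, fs, spacing), 1))
```

Bounding the search by the microphone spacing keeps the cost low, which matters when the whole estimate must fit inside a tight latency budget.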

Safety and Regulation

EU Regulation 2019/2144 mandates that Level 3+ automated vehicles detect emergency vehicles acoustically. In automotive applications, audio is therefore both a legal and a technical necessity. Humanoid robots operating in shared spaces such as sidewalks, warehouses, or hospitals could face similar regulatory requirements.

Building A Sound Corpus for Humanoid Robots

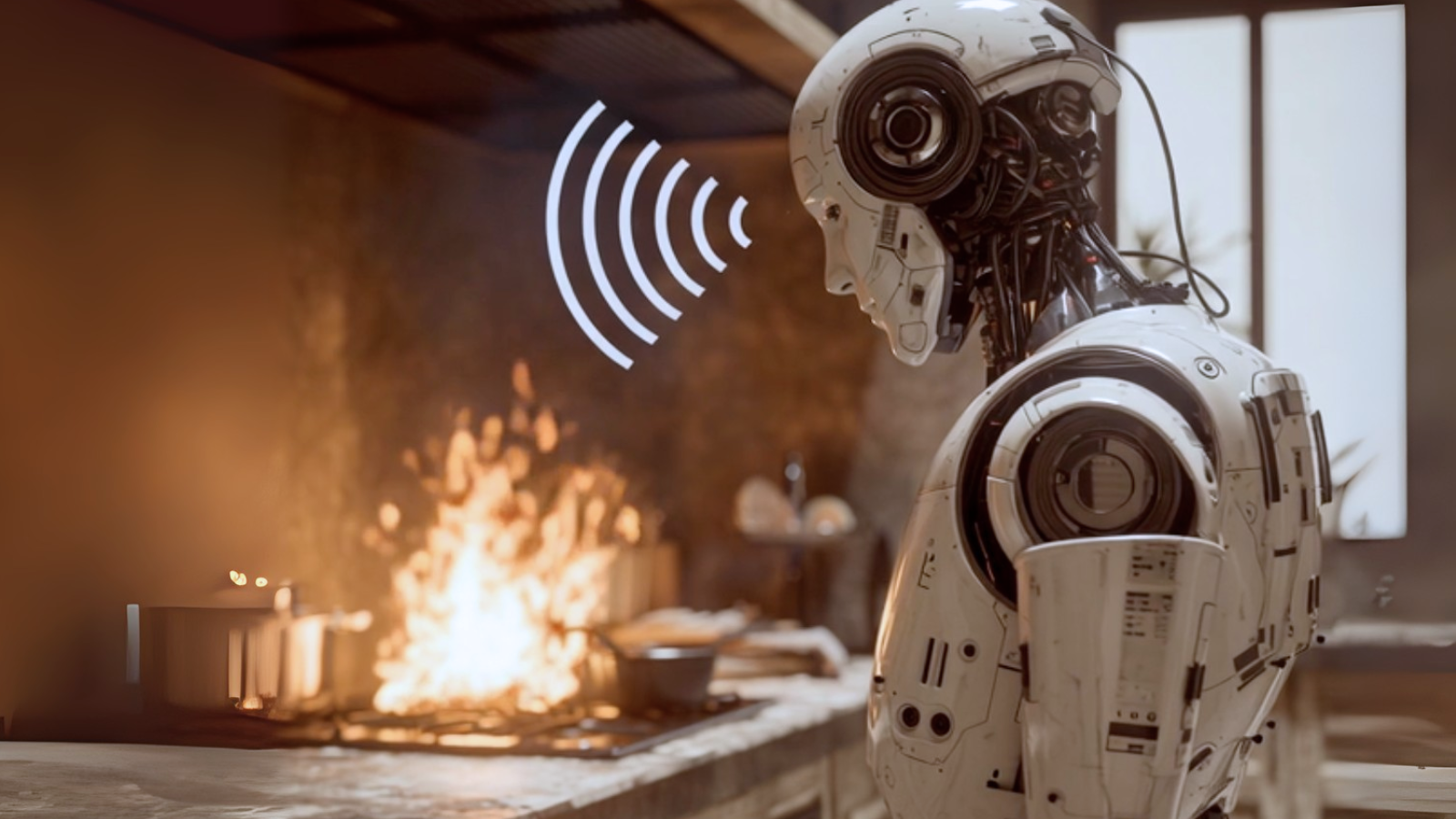

Humanoid robots, especially the ones that will serve at home, will operate in cluttered, occlusion-heavy environments where audio cues are vital: a kettle whistling behind a cupboard, a colleague calling from another room, or an alarm signalling danger.

The sensor stacks that let cars hear ambulances can also help robots anticipate humans, orient their gaze toward speakers, or detect hazards before visual confirmation. The hardware for efficient robotic hearing is already mature, and its cost continues to fall.

However, humanoid robotics companies will need to build their own datasets of sounds and acoustic experiences to seed generalized audio embeddings for embodied AI, using transfer learning to accelerate development.

The Approach

Robust audio perception is critical for humanoid robots to achieve human-like situational awareness in dynamic, occlusion-heavy environments. Existing datasets, such as AudioSet’s 632-class, 2-million-clip ontology, provide a broad foundation for sound classification but fall short for robotic applications due to limited spatial metadata, sparse robot-centric events, and insufficient coverage of safety-critical sounds (research.google.com).

To address these gaps, we propose a tiered sound corpus tailored for models in embodied AI, with specifications designed to support real-time processing, spatial localization, and cross-modal integration.

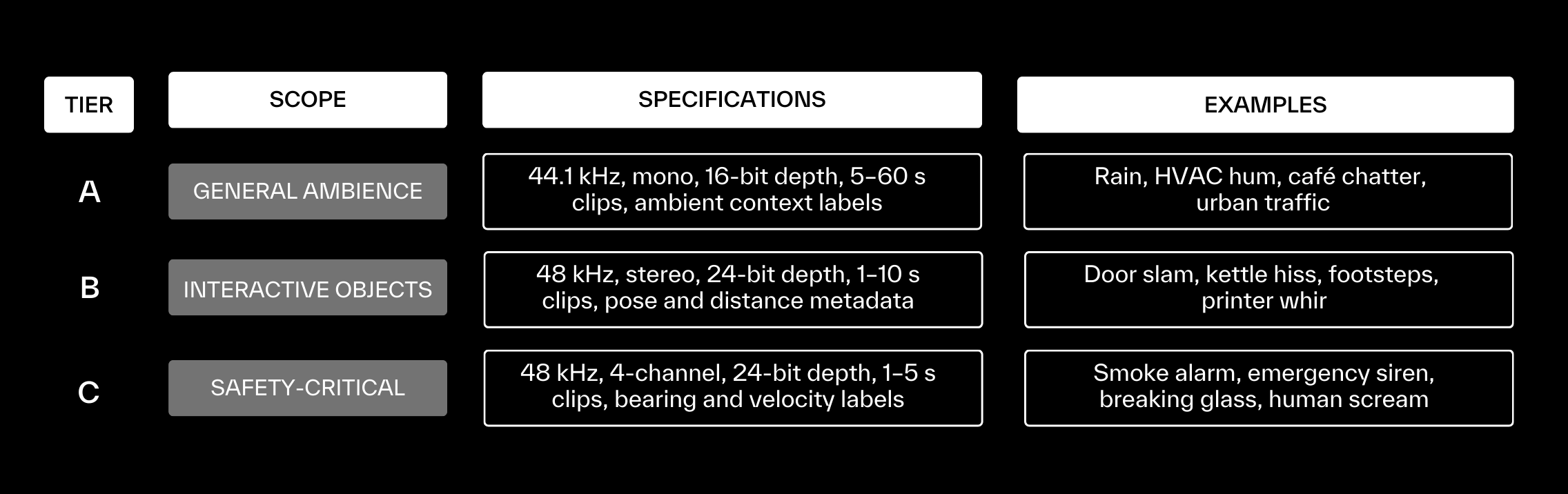

Proposed Tiered Corpus

The corpus is organized into three tiers, each targeting distinct robotic use cases: general environmental awareness, interaction with objects, and safety-critical detection. The table below outlines the scope, technical specifications, and example sounds for each tier.

Tier A: General Ambience

- Purpose: Provide robots with contextual awareness of their environment, enabling differentiation between settings (e.g., office vs. warehouse) and adaptation to ambient noise for improved speech recognition.

- Specifications: Mono recordings at 44.1 kHz suffice for capturing low-complexity background sounds. Clips range from 5–60 seconds to reflect sustained ambient conditions. Labels include environment type (e.g., indoor, outdoor, urban) and intensity (e.g., dB levels).

- Collection: Leverage crowdsourced recordings from diverse settings, augmented with synthetic noise for underrepresented environments (e.g., construction sites).

- Use Case: A robot in a hospital uses ambient cues to distinguish a quiet ward from a bustling lobby, adjusting its behavior or speech volume accordingly.
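For the intensity label, a device-independent starting point is the clip's RMS level in dBFS (decibels relative to digital full scale). The helper below assumes float samples normalized to [-1, 1] and an arbitrary -120 dB floor for silence; converting dBFS to calibrated dB SPL would additionally require a per-device microphone calibration, which this sketch does not attempt.

```python
import math


def rms_dbfs(samples, floor_db=-120.0):
    """RMS level in dBFS for float samples in [-1, 1]; silence returns floor_db."""
    if not samples:
        return floor_db
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(rms))


print(rms_dbfs([1.0, -1.0] * 100))  # full-scale square wave: 0 dBFS
print(rms_dbfs([0.5] * 10))         # half amplitude: about -6 dBFS
```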

Tier B: Interactive Objects

- Purpose: Enable robots to recognize and interact with objects that produce distinctive sounds, grounding language instructions (e.g., “pick up the buzzing phone”) in acoustic cues.

- Specifications: Stereo recordings at 48 kHz with 24-bit depth capture spatial cues for basic localization. Clips of 1–10 seconds align with short, object-specific events. Metadata include object pose (relative position) and distance (e.g., 0.5–5 m) to support audio-visual correspondence.

- Collection: Use robotic platforms equipped with stereo microphones to record interactions in controlled settings (e.g., labs, homes), and supplement these with crowdsourced recordings from diverse settings.

- Use Case: A robot hears a kettle hissing, localizes it behind a counter, and infers it needs attention, aligning with a user’s command to “check the stove.”
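The Tier B specification could be enforced at ingestion with a simple schema check. The record below is a hypothetical layout: the field names, the relative-position axes, and the 20% distance tolerance are assumptions, and the example distance range of 0.5–5 m is treated as the accepted range. It returns readable problems rather than raising, so reviewers can see every issue at once.

```python
import math
from dataclasses import dataclass


@dataclass
class TierBClip:
    label: str               # e.g. "phone buzzing"
    sample_rate_hz: int
    bit_depth: int
    channels: int
    duration_s: float
    distance_m: float
    # Object position relative to the recorder in metres (x forward, y left, z up); hypothetical convention.
    relative_position_m: tuple = (0.0, 0.0, 0.0)

    def problems(self):
        """Return a list of spec violations; an empty list means the clip matches Tier B."""
        issues = []
        if self.channels != 2:
            issues.append("expected stereo (2 channels)")
        if self.sample_rate_hz != 48_000:
            issues.append("expected 48 kHz")
        if self.bit_depth != 24:
            issues.append("expected 24-bit samples")
        if not 1.0 <= self.duration_s <= 10.0:
            issues.append("duration outside 1-10 s")
        if not 0.5 <= self.distance_m <= 5.0:
            issues.append("distance outside 0.5-5 m")
        # The stated distance should roughly agree with the stated position, when one is given.
        norm = math.sqrt(sum(c * c for c in self.relative_position_m))
        if norm > 0 and abs(norm - self.distance_m) > 0.2 * self.distance_m:
            issues.append("distance disagrees with relative position")
        return issues


clip = TierBClip("kettle hissing", 48_000, 24, 2, 3.0, 2.0, (2.0, 0.0, 0.0))
print(clip.problems())  # prints []
```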

Tier C: Safety-Critical

- Purpose: Equip robots to detect and respond to urgent events, such as alarms or human distress, with high accuracy and low latency, even in noisy or occluded settings.

- Specifications: Four-channel recordings at 48 kHz with 24-bit depth enable precise sound localization via beamforming and triangulation. Clips of 1–5 seconds focus on transient, critical events. Metadata include bearing (azimuth and elevation) and, where applicable, velocity (e.g., for approaching sirens).

- Collection: Requires purpose-built recording rigs with multi-microphone arrays, deployed in real-world scenarios. This tier will likely need far less data than the other tiers. Controlled simulations can supplement data for rare events like explosions.

- Use Case: A robot in a warehouse hears a smoke alarm, localizes it to a specific aisle, and navigates to assist or alert human workers, even without visual confirmation.
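The velocity metadata for approaching sources can be illustrated with the classic Doppler relation for a moving source and a stationary listener, f_obs = f_src * c / (c - v), where positive v means the source is approaching. Solving for v gives the sketch below. It assumes the emitted frequency is known and the robot is stationary; real sirens sweep in frequency, so a practical estimator would need to track the sweep or compare against a source model, which this sketch does not do.

```python
SPEED_OF_SOUND = 343.0  # m/s, dry air at roughly 20 °C


def observed_frequency(f_source_hz, velocity_mps, c=SPEED_OF_SOUND):
    """Frequency heard by a stationary listener; velocity > 0 means the source approaches."""
    return f_source_hz * c / (c - velocity_mps)


def radial_velocity(f_source_hz, f_observed_hz, c=SPEED_OF_SOUND):
    """Invert the relation above: v = c * (1 - f_source / f_observed)."""
    return c * (1.0 - f_source_hz / f_observed_hz)


# A 1 kHz tone from a source approaching at 20 m/s is heard near 1062 Hz.
heard = observed_frequency(1000.0, 20.0)
print(round(heard, 1), round(radial_velocity(1000.0, heard), 3))
```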

Challenges and Solutions

- Diversity: Acoustic environments vary widely due to room acoustics, weather, or cultural factors (e.g., reverberant hallways vs. open streets). Solution: Collect data from diverse global regions and use augmentation techniques (e.g., adding reverb, Doppler shifts) to simulate variations. Partner with international research groups to expand geographic coverage.

- Scalability: Collecting and annotating Tier C data is resource-intensive due to the need for controlled, high-stakes scenarios. Solution: Adopt open-source sharing models, as with UrbanSound8K-AV, to crowdsource contributions from academic and industry partners. Develop automated annotation pipelines to reduce manual effort.

- Privacy: Audio recordings may capture sensitive information, such as voices or private conversations. Solution: Implement anonymization techniques, including voice scrambling, spectral masking, or filtering out human speech. Adhere to GDPR, CCPA, and other data protection regulations, ensuring informed consent for recordings in public or private spaces.

- Quality Control: Inconsistent recording quality or labeling errors can degrade model performance. Solution: Standardize recording protocols (e.g., microphone placement, sampling rates) and use automated quality checks (e.g., signal-to-noise ratio analysis) alongside human oversight.
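The reverb augmentation mentioned under Diversity can be sketched by convolving a dry clip with a synthetic room impulse response: exponentially decaying noise whose amplitude falls by 60 dB (a factor of 1000) over a chosen RT60. This is one simple, assumed approach rather than a prescribed pipeline. Measured impulse responses or room simulators give more realistic results, and long clips would use FFT-based convolution (for example `scipy.signal.fftconvolve`) instead of the direct loop here.

```python
import math
import random


def synthetic_ir(rt60_s, sample_rate_hz, seed=0):
    """Exponentially decaying Gaussian noise whose amplitude drops 60 dB after rt60_s."""
    rng = random.Random(seed)
    n = max(1, round(rt60_s * sample_rate_hz))
    decay = math.log(1000.0) / n  # 60 dB corresponds to a factor of 1000 in amplitude
    ir = [rng.gauss(0.0, 1.0) * math.exp(-decay * i) for i in range(n)]
    ir[0] = 1.0  # direct path
    return ir


def convolve(x, h):
    """Direct-form linear convolution; output length is len(x) + len(h) - 1."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        if xi == 0.0:
            continue
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def add_reverb(dry, rt60_s, sample_rate_hz, seed=0):
    """Reverberate a clip and rescale it so its peak matches the dry input's peak."""
    wet = convolve(dry, synthetic_ir(rt60_s, sample_rate_hz, seed))
    peak_in = max((abs(s) for s in dry), default=0.0)
    peak_out = max((abs(s) for s in wet), default=0.0)
    if peak_out == 0.0:
        return wet
    scale = peak_in / peak_out
    return [s * scale for s in wet]


wet = add_reverb([1.0] + [0.0] * 99, rt60_s=0.01, sample_rate_hz=8000)
print(len(wet))
```

Drawing several RT60 values and seeds per source clip turns one recording into many plausible rooms, from dry offices to reverberant hallways.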

Smartphone-Based DePIN as a Powerful Collection Method

For Tiers A (general ambience) and B (interactive objects), smartphones offer a scalable, cost-effective solution for crowdsourcing audio data through decentralized physical infrastructure networks (DePIN). Projects like Silencio, originally designed for capturing ambient noise levels, demonstrate the potential of smartphone-based DePIN to collect diverse, geolocated audio datasets. By upgrading such platforms, we can create a global, decentralized audio corpus that is invaluable for developing robust VLA models for humanoid robots.

Why Smartphones and DePIN?

- Ubiquity: Over 6 billion smartphones worldwide provide a massive, distributed sensor network, capable of capturing audio in diverse environments (e.g., homes, offices, public spaces) without requiring specialized hardware.

- Built-in Sensors: Modern smartphones feature high-quality microphones (often 44.1–48 kHz, stereo), GPS for geolocation, and accelerometers for contextual metadata (e.g., stationary vs. moving). These enable rich, context-aware datasets for Tiers A and B.

- Decentralized Incentives: DePIN platforms incentivize users to contribute data through token-based rewards or other gamified mechanisms, ensuring scalability and user engagement. Silencio’s model of rewarding noise-level contributions can be extended to include event-based audio clips with spatial metadata.

Potential Implementation

- Platform Upgrades: Enhance existing DePIN apps like Silencio to support structured audio collection. Add features for users to record short clips (5–60 s for Tier A, 1–10 s for Tier B) with metadata like location, time, and device orientation. Integrate user-friendly interfaces to tag sounds (e.g., “café chatter,” “door slam”) or confirm automated labels.

- Data Specifications: Collect mono or stereo audio at 44.1–48 kHz with 16–24-bit depth, aligned with Tier A and B requirements. Include metadata such as GPS coordinates, ambient light levels (via phone sensors), and user-provided context (e.g., “recorded in a busy mall”). For Tier B, encourage users to record object-specific sounds with approximate distance estimates (e.g., “phone buzzing 1 m away”).

- Quality Assurance: Implement automated filters to reject low-quality recordings (e.g., clipped audio, excessive background noise). Use machine learning to pre-classify sounds and flag outliers for human review. Reward users for high-quality contributions to maintain dataset integrity.

- Privacy Protections: Apply real-time anonymization (e.g., removing speech components via spectral subtraction) before data upload. Ensure compliance with local data laws by anonymizing geolocation to coarse regions (e.g., city-level) and obtaining user consent.
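A minimal sketch of the automated pre-upload checks described above, assuming mono float samples in [-1, 1]: it enforces the tier duration windows, rejects clips with too many samples at or near full scale (a simple clipping proxy), and coarsens GPS coordinates. The thresholds and function names are hypothetical. Rounding to one decimal place keeps roughly 11 km of latitude resolution, close to city-level granularity. Speech removal and SNR analysis are omitted.

```python
TIER_DURATION_S = {"A": (5.0, 60.0), "B": (1.0, 10.0)}  # from the Tier A and B specifications


def clipped_fraction(samples, threshold=0.999):
    """Share of samples whose absolute value is at or above the clipping threshold."""
    if not samples:
        return 0.0
    return sum(1 for s in samples if abs(s) >= threshold) / len(samples)


def coarsen_location(lat, lon, decimals=1):
    """Round coordinates so an upload reveals only an approximate area."""
    return round(lat, decimals), round(lon, decimals)


def check_clip(samples, sample_rate_hz, tier, max_clipped=0.001):
    """Return (accepted, reason) for a mono clip destined for Tier A or B."""
    low, high = TIER_DURATION_S[tier]
    duration_s = len(samples) / sample_rate_hz
    if not low <= duration_s <= high:
        return False, "duration out of range"
    if clipped_fraction(samples) > max_clipped:
        return False, "too much clipping"
    return True, "ok"


print(check_clip([0.1] * 6000, 1000, "A"))        # 6 s of quiet audio: accepted
print(coarsen_location(-23.5505, -46.6333))       # São Paulo, rounded to ~11 km
```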

Use Case Example

A user in São Paulo uses a DePIN app to record 30 seconds of street traffic (Tier A) and a 5-second clip of a bicycle bell (Tier B). The app tags the traffic clip with “urban, daytime, Brazil” and the bell clip with “2 m distance, outdoor.” These contributions, aggregated from thousands of users globally, create a diverse dataset that trains robots to recognize ambient contexts and object interactions across cultures and environments.

Advantages Over Traditional Methods

- Scale: DePIN leverages millions of users, far surpassing the reach of robot-based or static-array collection.

- Cost: Smartphones eliminate the need for deploying dedicated hardware, reducing costs significantly.

- Diversity: Global user participation ensures coverage of varied acoustic environments, from rural to urban, indoor to outdoor.

- Real-Time Updates: Continuous data collection keeps the corpus current, capturing seasonal or event-specific sounds (e.g., holiday markets, construction peaks).

Challenges and Mitigations

- Data Bias: User demographics (e.g., urban, tech-savvy) may skew the dataset. Mitigation: Target outreach to underrepresented regions and demographics through partnerships with NGOs or local governments.

- Inconsistent Hardware: Smartphone microphones vary in quality. Mitigation: Normalize recordings to a standard format and calibrate for known device profiles during preprocessing.

- User Engagement: Sustained participation requires incentives. Mitigation: Offer tiered rewards based on data quality and frequency, integrating gamification or micro-payments.
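The hardware normalization step could look like the sketch below: apply a per-model gain correction from a calibration table, then resample to a common rate. The device names and gain values are placeholders, not real calibration data. Linear interpolation is used only for brevity; it does not low-pass filter, so a production pipeline would use a polyphase resampler such as `scipy.signal.resample_poly` to avoid aliasing.

```python
# Hypothetical calibration table: gain (dB) that brings each phone model to a common reference.
DEVICE_GAIN_DB = {"phone_model_x": 3.0, "phone_model_y": -1.5}


def apply_gain_db(samples, gain_db):
    """Scale samples by a gain expressed in decibels."""
    g = 10 ** (gain_db / 20.0)
    return [s * g for s in samples]


def resample_linear(samples, src_hz, dst_hz):
    """Naive linear-interpolation resampler (no anti-aliasing filter)."""
    if src_hz == dst_hz or not samples:
        return list(samples)
    n_out = int(round(len(samples) * dst_hz / src_hz))
    out = []
    for k in range(n_out):
        pos = k * src_hz / dst_hz        # position of output sample k in input samples
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]  # hold last sample
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
    return out


def normalize_recording(samples, src_hz, device, dst_hz=48_000):
    """Apply the device's gain correction (0 dB if unknown), then resample to dst_hz."""
    gain = DEVICE_GAIN_DB.get(device, 0.0)
    return resample_linear(apply_gain_db(samples, gain), src_hz, dst_hz)


print(resample_linear([0.0, 1.0, 2.0, 3.0], 2, 4))
```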

Integration with Tier C

While smartphones are ideal for Tiers A and B, Tier C (safety-critical) requires controlled, high-fidelity recordings with precise spatial metadata. DePIN can still contribute indirectly by collecting supplementary data (e.g., urban sirens recorded by users) to pre-train models, which are then fine-tuned with purpose-built, multi-channel recordings from professional rigs.

Conclusion

Autonomous vehicles proved that audio can provide meaningful safety gains when vision falls short. Humanoid robots may similarly benefit, though the urgency and regulatory drivers are not yet in place. Audio should be treated as a complementary modality that enhances robustness.

Decentralized data collection through platforms like Silencio can lower barriers for Tier A and B datasets. By crowdsourcing diverse, geolocated soundscapes from millions of smartphones, Silencio’s DePIN platform could address the limitations of datasets like AudioSet, which lack spatial metadata and robot-centric events.

Future work should focus on expanding this corpus with global, diverse recordings, refining low-latency architectures, and deploying rugged, cost-effective hardware like automotive-grade MEMS microphones. Collaborative efforts, inspired by automotive precedents and powered by decentralized innovation, will drive this vision forward.

Bullish on Robotics? So Are We.

XMAQUINA is a decentralized ecosystem giving members direct access to the rise of humanoid robotics and Physical AI—technologies set to reshape the global economy.


---------------------

Co-Authored by Silencio Network

Drawing on the world's largest noise-level data bank, Silencio is unlocking new ways to capture data that power the full spectrum of audio intelligence for the next generation of AI and robotics.

References

- Waymo, Recognizing the sights and sounds of emergency vehicles, company blog, 2017. (waymo.com)

- Bosch Research, Embedded siren detection, 2025. (bosch.com)

- Sieracki et al., Seeing with Sound: AI‑Based Detection of Participants in Automotive Environment from Passive Audio, Renesas white paper, 2024. (renesas.com)

- Sun et al., Emergency Vehicles Audio Detection and Localization in Autonomous Driving, arXiv 2109.14797, 2021. (arxiv.org)

- Florentino et al., A Dataset for Environmental Sound Recognition in Embedded Systems for Autonomous Vehicles, Sci Data 12:1148, 2025. (pmc.ncbi.nlm.nih.gov)

- Sensors 25(3):793, Enhancing Road Safety with AI‑Powered Detection and Localization of Emergency Vehicles, 2025. (mdpi.com)

- Gemmeke et al., AudioSet: An Ontology and Human‑Labeled Dataset for Audio Events, ICASSP 2017; dataset portal accessed 2025. (research.google.com)

- Lu et al., Unified‑IO 2: Scaling Autoregressive Multimodal Models with Vision, Language, Audio, and Action, CVPR 2024. (arxiv.org)
